Lena Strauss

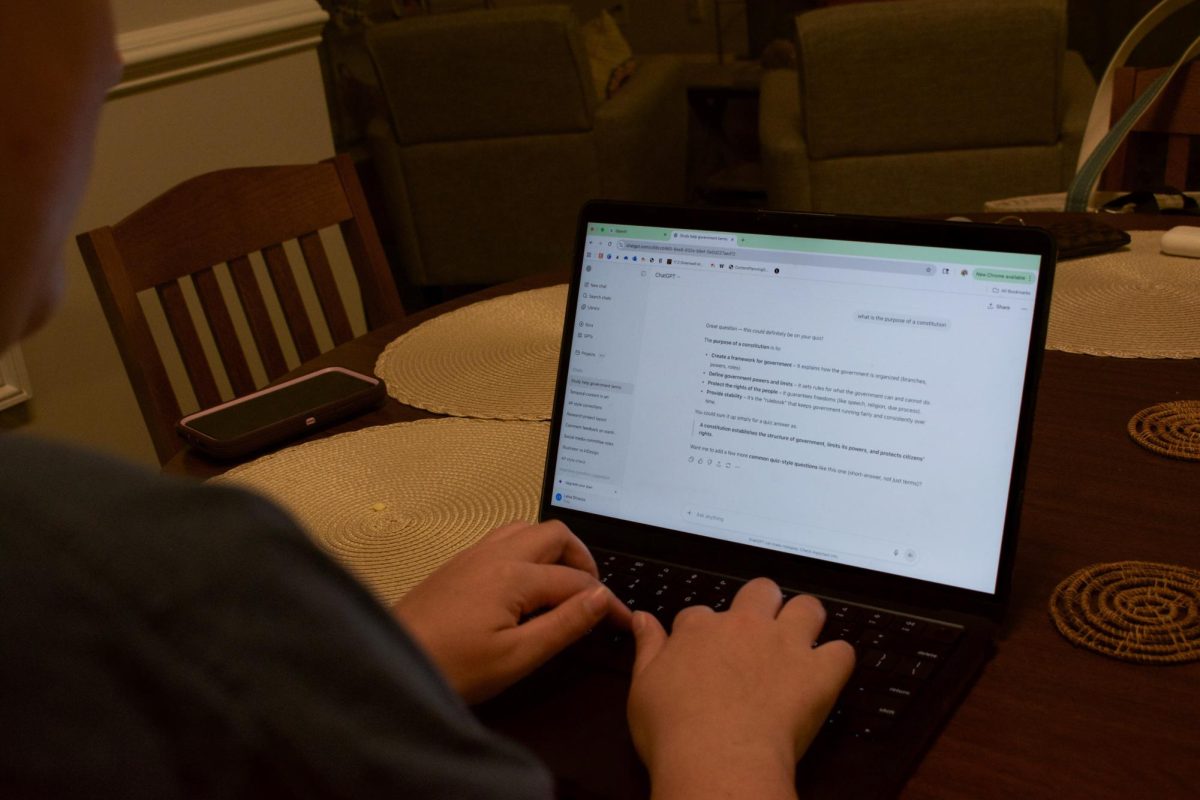

Lillian Smith ‘26, pictured working in her Senior Village apartment, uses AI as a study tool to prepare for upcoming exams.

As classes have commenced for the 2025-26 academic year, Wofford students have acclimated to the expectations and workloads of their fall semester classes. However, the staple clause on artificial intelligence present in many syllabi seems to be shifting to include, rather than discourage, the use of generative technologies.

Whereas the majority of courses in previous semesters threatened major consequences and immediate Honor Council action for AI usage on assignments, several Wofford professors are becoming more accepting of AI in the classroom, with some even taking measures to weave it into their curriculum. In the wake of this drastic shift in the narrative around AI use, students may be left wondering what this could look like in the classroom.

The Wofford Honor Code categorizes the “unauthorized use of generative artificial intelligence to create content that is submitted as one’s own” as plagiarism, yet this careful wording allows for the approved use of AI in other ways, which could even be guided or suggested by professors.

Associate Professor of English Dr. Kimberly Hall is one of the leading voices on the topic of AI in Wofford classrooms. As covered in a fall 2023 issue of The Old Gold & Black, Hall was responsible for the initiatives of Wofford’s AI Working Group, a student-faculty team which explored how best, if at all, to incorporate AI into a college curriculum and lifestyle.

Hall maintains that AI has much to offer that is not academically dishonest. She suggested that students can build “personalized learning agents” within Microsoft’s AI assistant, Copilot, by feeding them course materials, then consult those agents for more refined searches. She argued that tools of this nature could facilitate personalized learning and problem solving when professors or tutors may not be accessible.

Another point raised during the Wofford AI Working Group’s open discussion in 2023 was how AI tools can complement a liberal arts education specifically. Elon University’s “AI Guide for Students,” released this year, includes such advice, suggesting how to use AI for creative and numerical work while also cautioning students that it cannot fully replace their own thinking.

“Struggling with ‘What is my idea? How do I put it into words? What is the best way to organize this information?’ – those are the skills that we want (students) to have because if you don’t have them, you can’t evaluate the output that AI gives you,” Hall said.

However, the intentions of educators like Hall cannot limit the reach of AI or control how students choose to use it in their analytical work. Since the initial year-long session held by Wofford’s AI Working Group, AI resources like ChatGPT have advanced considerably. Much of this change in AI policy at Wofford seems to recognize that the technology’s use and popularity are largely outside of anyone’s control, and it raises questions about how to prepare students to encounter AI in the professional world.

As AI inevitably enters the workplace, the task of educators and institutions is now to ensure that their students have skills that AI cannot replicate and are able to work in tandem with the tool.

This responsibility to create career-readiness conflicts with ethical concerns about colleges encouraging AI. The data centers that support these technologies noticeably degrade the water supplies and environments of surrounding communities. Given these tradeoffs, is it responsible for any college to incorporate rather than condemn AI?

This concern is more relevant than ever at Wofford, as Spartanburg is poised to gain its own data center. According to the Post & Courier, NorthMark Strategies has plans to build one of these supercomputers on Pine Street.

AI use at any university remains a complex and nuanced issue, primarily because of how new and ever-changing the technology is for students and professors alike.

“I don’t even think we can ever get ahead of (AI),” Hall said. “I think we’re like ‘How do we learn to live with this?’ What is the reality of higher education when this is everywhere?”

At the very least, the conversation on ethical and academically equitable AI use remains open at Wofford. Hall gave assurances that Wofford’s discussion-based approach to education will never fundamentally change. Even so, it is still worth engaging with the different social, environmental and academic impacts of this issue.