Artificial intelligence software is changing the technology landscape and could disrupt many industries, including education. Because these programs can automatically generate words and images, many people see the potential for both new problems and new opportunities.

One of the most widely used platforms is OpenAI’s ChatGPT, which can generate text on its own. Released to the public in November 2022, the application reached one million users in just five days.

The first step in working with programs like ChatGPT is understanding them.

GPT stands for Generative Pre-trained Transformer. The application uses a type of neural network called a transformer, which takes the text it has already produced into account as it generates each new word, leading to more coherent responses. In simpler terms, it was trained on a very large collection of text, much of it from the internet, and draws on the patterns in that text to create digestible language outputs.

The other prominent feature of ChatGPT is its ability to engage in conversation. After writing a response to a question, it can receive follow-up commands: “make it shorter,” “make it longer,” “make it sound like a fifth grader wrote it.”

By processing these commands, it can adapt text to fit the user’s needs.

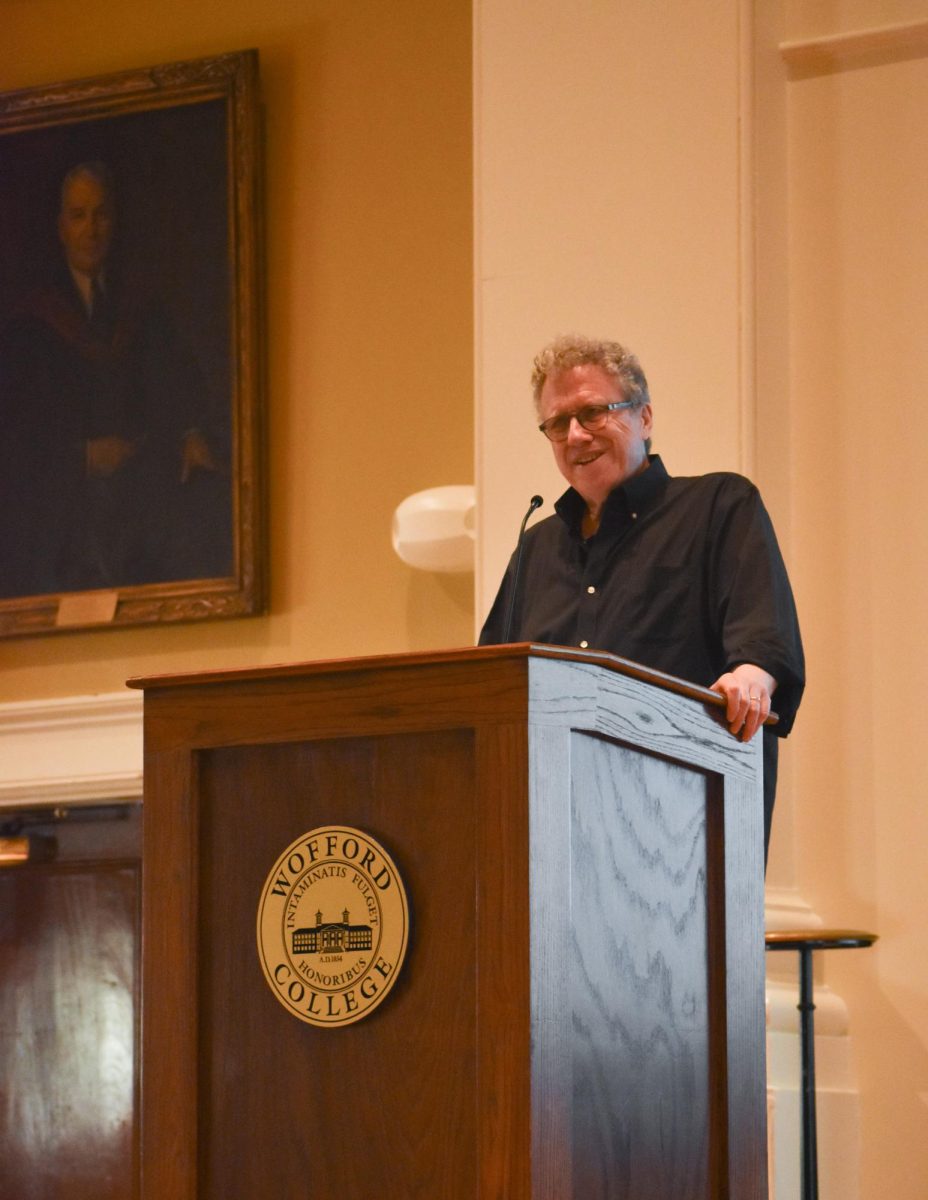

Aaron Garrett, associate professor and chair of computer science, has some experience working with ChatGPT and described it as an advanced “auto-fill” or “chat-bot.”

“It’s not smart. It doesn’t ‘understand’ anything, and that’s one of the big confusions,” Garrett said. “It’s fluent because it has, in some sense, internalized the statistics of English.”
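
Garrett’s idea of a program that has “internalized the statistics of English” can be illustrated with a toy example. The sketch below is not how ChatGPT works internally; it is a far simpler technique, a word-pair (bigram) counter, and the sample text and function names are made up for illustration. It only shows how a program can produce fluent-looking text purely from counts of which word tends to follow which.

```python
import random
from collections import Counter, defaultdict

# Tiny made-up corpus (an assumption for illustration only).
CORPUS = (
    "the cat sat on the mat . "
    "the dog sat on the rug . "
    "the cat chased the dog ."
)


def build_bigram_counts(text):
    """Count how often each word is followed by each other word."""
    words = text.split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def generate(counts, start, length, seed=0):
    """Build text by repeatedly sampling a next word in proportion to its count."""
    rng = random.Random(seed)  # fixed seed so repeated runs give the same text
    output = [start]
    for _ in range(length - 1):
        followers = counts.get(output[-1])
        if not followers:
            break  # the last word was never followed by anything in the corpus
        candidates = list(followers.keys())
        weights = list(followers.values())
        output.append(rng.choices(candidates, weights=weights, k=1)[0])
    return " ".join(output)


counts = build_bigram_counts(CORPUS)
print(generate(counts, "the", 8))
```

Every pair of neighboring words in the output also appears somewhere in the sample text, which is why the result reads as plausible. The program has no notion of whether what it says is true.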

One of the major insights Garrett provided was not just what ChatGPT can do, but what it cannot.

“It can tell you something, but it can’t tell you how it knows it, or how it knows it’s right,” Garrett said.

As many users have found, Garrett included, ChatGPT cannot reliably cite sources. Multiple Reddit threads describe a problem where ChatGPT will confidently “cite” a source that does not exist, inventing web pages or articles that were never written.

“It looks very convincing, but if you check it, the source doesn’t exist,” Garrett said.

This is because ChatGPT can reproduce what a source is supposed to look like in a certain format, but it does not actually look that source up.

Garrett said this is his main concern with ChatGPT when it comes to academic use.

“Students will go to Chat and say, ‘Hey, write X.’ And it will. And the answer will be convincing but totally wrong,” he said.

When asked whether using ChatGPT should be considered strictly plagiarism, Garrett said it is a more complicated discussion.

“If I ask Chat to write a story, can I publish that and put my name on it? Did I write it? I mean, I did give it the prompt and honestly the prompt is everything. Does that story belong to me or does that story belong to it? Or is it a combination? I didn’t write the words, but it wouldn’t have told the story if I hadn’t given it the prompt,” he said.

Like many others, Garrett believes there will have to be many discussions about copyright in the future.

Garrett also said there is no real reason to “fear” ChatGPT, as it is not sentient.

The main concern in the artificial intelligence field is “general” intelligence: a point at which AI can build categories and begin solving problems beyond the specific task it was designed for, something Garrett believes is still far off.

“Right now it’s like an elaborate spreadsheet that has learned the features of English to the point that it is eloquent and it has ‘learned’ a bunch of facts and even interpretations,” Garrett said.

Currently, he uses it for creative projects outside of academic work and thinks it is a fun tool to play with.

“It’s dumb, but it’s very convincing. It’s like your dumb fluent friend,” he said.

Donner Rizzo-Banks, contributing writer